A cached value is dynamic data that is expensive to calculate and that we treat as constant for a period of time.

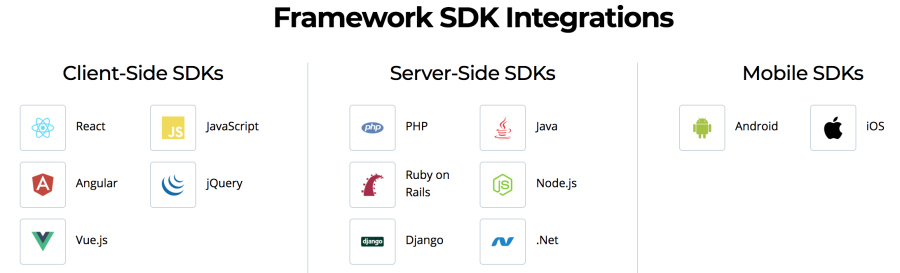

There are several good caching modules on npm, but sometimes they don't offer enough flexibility for customization. In my case, I was spending more time trying to tweak an existing module than it would take to develop my own.

Caching data can be as easy as a collection in memory that holds our most used records for the whole life cycle of our web app.
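As a minimal sketch of that idea, a plain Map can hold records for as long as the process lives. The names `loadRecord` and `getRecord` and the record shape are made up for illustration; they are not part of the project.

```javascript
// Hypothetical stand-in for an expensive lookup (e.g. a database query).
let loads = 0;
function loadRecord(id) {
  loads++;
  return { id: id, name: `record ${id}` };
}

// The in-memory collection: it lives as long as the process does.
const records = new Map();

function getRecord(id) {
  if (!records.has(id)) {
    // first request for this id: do the expensive work and remember the result
    records.set(id, loadRecord(id));
  }
  return records.get(id);
}

getRecord(1);
getRecord(1);
getRecord(2);
console.log(loads); // 2: the second call for id 1 was served from memory
```

Nothing is ever removed from this Map and nothing ever expires, which is exactly the limitation the rest of the post deals with.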

Of course, that approach does not scale if our web app receives a lot of traffic or if we have a huge number of records to load in memory.

Caching can happen at different levels, such as the web layer or the persistence layer. This implementation caches data at the persistence layer.

Take this implementation as a starting point. It probably won't be the most optimized solution for your problem. There's no magic: you will have to spend some time tuning it until you get good results in terms of performance.

Among other reasons, you will cache to:

- Reduce response time

- Reduce the load on your database

- Save money: less CPU, less memory, fewer instances, etc.

Let's code a simple solution as a first attempt.

If you want to go straight to the source code, go ahead https://github.com/andrescanavesi/node-cache

I will use the Express.js framework and Node.js 12. First, generate the project skeleton with the Express application generator:

$ express --no-view --git node-cache

create : node-cache/

create : node-cache/public/

create : node-cache/public/javascripts/

create : node-cache/public/images/

create : node-cache/public/stylesheets/

create : node-cache/public/stylesheets/style.css

create : node-cache/routes/

create : node-cache/routes/index.js

create : node-cache/routes/users.js

create : node-cache/public/index.html

create : node-cache/.gitignore

create : node-cache/app.js

create : node-cache/package.json

create : node-cache/bin/

create : node-cache/bin/www

change directory:

$ cd node-cache

install dependencies:

$ npm install

run the app:

$ DEBUG=node-cache:* npm start

$ cd node-cache

$ npm install

Open the file app.js and remove this line:

app.use(express.static(path.join(__dirname, 'public')));

Install nodemon to restart our web app automatically whenever we change the code:

npm install nodemon --save

Open the file package.json and change the start script to:

"start": "nodemon ./bin/www"

Previously it was:

"start": "node ./bin/www"

Install the tools needed for tests

npm install mocha --save-dev

npm install chai --save-dev

npm install chai-http --save-dev

npm install nyc --save-dev

npm install mochawesome --save-dev

npm install randomstring --save-dev

You may already know mocha and chai, but we also installed some extra tools:

- nyc: code coverage.

- mochawesome: an awesome HTML report with our test results.

- randomstring: to generate some random data.

After installing them, we need to configure a few things:

- Create a folder called tests in the root of our project.

- In our .gitignore file, add the reports folder so that it is ignored: tests/reports/

- Open the package.json file and add a test command under scripts:

"scripts": {

"start": "nodemon ./bin/www",

"test": "NODE_ENV=test nyc --check-coverage --lines 75 --per-file --reporter=html --report-dir=./tests/reports/coverage mocha tests/test_*.js --recursive --reporter mochawesome --reporter-options reportDir=./tests/reports/mochawesome --exit"

},

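Create a file tests/test_cache.js with a basic skeleton in order to check the configuration. It already requires utils/Cache.js, which we create in the next section, so the tests will only run once that file exists: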

const app = require("../app");

const chai = require("chai");

const chaiHttp = require("chai-http");

const assert = chai.assert;

const expect = chai.expect;

const {Cache} = require("../utils/Cache");

// Configure chai

chai.use(chaiHttp);

chai.should();

describe("Test Cache", function() {

this.timeout(10 * 1000); //10 seconds

it("should cache", async () => {

//

});

});

Run the tests to make sure everything is configured:

npm test

The output will be something like this:

Test Cache

✓ should cache

1 passing (6ms)

[mochawesome] Report JSON saved to /Users/andrescanavesi/Documents/GitHub/node-cache/tests/reports/mochawesome/mochawesome.json

[mochawesome] Report HTML saved to /Users/andrescanavesi/Documents/GitHub/node-cache/tests/reports/mochawesome/mochawesome.html

ERROR: Coverage for lines (29.41%) does not meet threshold (75%) for /Users/andrescanavesi/Documents/GitHub/node-cache/routes/index.js

ERROR: Coverage for lines (50%) does not meet threshold (75%) for /Users/andrescanavesi/Documents/GitHub/node-cache/daos/dao_users.js

ERROR: Coverage for lines (28.57%) does not meet threshold (75%) for /Users/andrescanavesi/Documents/GitHub/node-cache/utils/Cache.js

npm ERR! Test failed. See above for more details.

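The run fails, and that is OK: the empty test itself passes, but it does not exercise any code yet, so line coverage stays below the 75% threshold we configured. We will implement real tests later.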

Cache implementation

Create a utils folder and, inside it, our Cache.js file:

/**

* @param name a name to identify this cache, example "find all users cache"

* @param duration cache duration in millis

* @param size max quantity of elements to cache. After that the cache will remove the oldest element

* @param func the function to execute (our heavy operation)

*/

module.exports.Cache = function(name, duration, size, func) {

if (!name) {

throw Error("name cannot be empty");

}

if (!duration) {

throw Error("duration cannot be empty");

}

if (isNaN(duration)) {

throw Error("duration is not a number");

}

if (duration < 0) {

throw Error("duration must be positive");

}

if (!size) {

throw Error("size cannot be empty");

}

if (isNaN(size)) {

throw Error("size is not a number");

}

if (size < 0) {

throw Error("size must be positive");

}

if (!func) {

throw Error("func cannot be empty");

}

if (typeof func !== "function") {

throw Error("func must be a function");

}

this.name = name;

this.duration = duration;

this.size = size;

this.func = func;

this.cacheCalls = 0;

this.dataCalls = 0;

/**

* Millis of the latest cache clean up

*/

this.latestCleanUp = Date.now();

/**

* A collection to keep our promises with the cached data.

* key: a primitive or an object to identify our cached object

* value: {created_at: <a date>, promise: <the promise>}

*/

this.promisesMap = new Map();

};

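/**

* @param showContent if true, the stats also list each cached key and its creation date

* @returns an object with usage statistics for this cache

*/

module.exports.Cache.prototype.getStats = function(showContent) {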

const stats = {

name: this.name,

max_size: this.size,

current_size: this.promisesMap.size,

duration_in_seconds: this.duration / 1000,

cache_calls: this.cacheCalls,

data_calls: this.dataCalls,

total_calls: this.cacheCalls + this.dataCalls,

latest_clean_up: new Date(this.latestCleanUp),

};

let hitsPercentage = 0;

if (stats.total_calls > 0) {

hitsPercentage = Math.round((this.cacheCalls * 100) / stats.total_calls);

}

stats.hits_percentage = hitsPercentage;

if (showContent) {

stats.content = [];

for (let [key, value] of this.promisesMap) {

stats.content.push({key: key, created_at: new Date(value.created_at)});

}

}

return stats;

};

/**

* @param {*} key

* @returns a promise with the cached data if present and not expired, otherwise with fresh data

*/

module.exports.Cache.prototype.getData = function(key) {

//serve from the cache only if the key is present and its object did not expire

if (this.promisesMap.has(key) && !this.isObjectExpired(key)) {

console.info(`[${this.name}] Returning cache for the key: ${key}`);

this.cacheCalls++;

return this.promisesMap.get(key).promise;

}

//the key is missing or its object expired: we have to get fresh data

this.dataCalls++;

return this.getFreshedData(key);

};

/**

*

* @param {*} key

* @returns a promise with the execution result of our cache function (func attribute)

*/

module.exports.Cache.prototype.getFreshedData = function(key) {

console.info(`[${this.name}] Processing data for the key: ${key}`);

const promise = new Promise((resolve, reject) => {

try {

resolve(this.func(key));

} catch (error) {

reject(error);

}

});

const cacheElem = {

created_at: Date.now(),

promise: promise,

};

this.cleanUp();

this.promisesMap.set(key, cacheElem);

return promise;

};

/**

* @param {*} key

* @returns true if the object cached for the key is older than the cache duration

*/

module.exports.Cache.prototype.isObjectExpired = function(key) {

if (!this.promisesMap.has(key)) {

return false;

} else {

const object = this.promisesMap.get(key);

const diff = Date.now() - object.created_at;

return diff > this.duration;

}

};

/**

* Removes the expired objects and the oldest if the cache is full

*/

module.exports.Cache.prototype.cleanUp = function() {

/**

* We clean up if the cache is full or if a whole duration has passed since the latest clean up

*/

if (this.promisesMap.size >= this.size || Date.now() - this.latestCleanUp > this.duration) {

let oldest = Date.now();

let oldestKey = null;

//iterate the map to remove the expired objects and find the oldest object, to

//be removed in case the cache is still full after removing expired objects

for (let [key, value] of this.promisesMap) {

if (this.isObjectExpired(key)) {

console.info(`the key ${key} is expired and will be deleted from the cache`);

this.promisesMap.delete(key);

} else if (value.created_at < oldest) {

oldest = value.created_at;

oldestKey = key;

}

}

//if after this clean up our cache is still full we delete the oldest

if (this.promisesMap.size >= this.size && oldestKey !== null) {

console.info(`the oldest element with the key ${oldestKey} in the cache was deleted`);

this.promisesMap.delete(oldestKey);

}

//remember when this clean up ran; otherwise the time-based check above

//would be true on every call once the first duration has passed

this.latestCleanUp = Date.now();

} else {

console.info("[cleanUp] cache will not be cleaned up this time");

}

};

/**

* Resets all the cache.

* Useful when we update several values in our data source

*/

module.exports.Cache.prototype.reset = function() {

this.promisesMap = new Map();

this.latestCleanUp = Date.now();

this.cacheCalls = 0;

this.dataCalls = 0;

};
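One consequence of storing promises rather than resolved values in promisesMap: callers that ask for the same key while the heavy operation is still running receive the same pending promise, so the operation is not started twice. Here is a stripped-down sketch of that behaviour. The `slowQuery` function and the `getShared` helper are hypothetical, and expiration and size limits are left out on purpose.

```javascript
// Hypothetical heavy operation: resolves after a short delay.
let queries = 0;
function slowQuery(key) {
  queries++;
  return new Promise((resolve) => {
    setTimeout(() => resolve(`rows for ${key}`), 20);
  });
}

// key -> promise, like promisesMap but without expiration or a size limit
const promises = new Map();

function getShared(key) {
  if (!promises.has(key)) {
    // store the promise right away, before it resolves
    promises.set(key, slowQuery(key));
  }
  return promises.get(key);
}

// Two "requests" arrive at the same time for the same key.
const first = getShared("users");
const second = getShared("users");

Promise.all([first, second]).then((results) => {
  console.log(first === second); // true: both callers got the same promise
  console.log(queries); // 1: the heavy operation ran only once
  console.log(results[0] === results[1]); // true: same resolved value
});
```

The flip side is that a rejected promise is stored as well, so with the Cache above a failed call keeps being returned for that key until its entry expires or reset() is called.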

Full source code: https://github.com/andrescanavesi/node-cache